Whether “False Negatives” or “False Positives”, the Answer May Not Lie Just in New or Improved Technologies, but in an Improved Mix of New Technologies and More Forgiving Regulatory Requirements

On January 24, 2020, Jo Ann Barefoot had Thomas Otting, Comptroller of the Currency, as her guest on her podcast. The link is available at Barefoot Otting Podcast. Among other things, the Comptroller talked about BSA/AML, or as he put it “AML/BSA”.

Approximately 12 minutes into the podcast, the Comptroller had this to say about BSA/AML:

“Are we doing it the most effective way? … what we’re doing, is it helping us catch the bad guys as they’re coming into the banking industry and taking advantage of it?”

In a discussion on technology trends, the Comptroller spoke about how banks are using new technologies to learn about their customers and for risk management. Beginning at the 20:45 mark, he stated:

“Today our AML/BSA relies upon a lot of systems to kick out a lot of data that often has an enormous amount of false negatives associated with it that requires a lot of resources to go through that false negative, and I think if we can get to the point where we have better fine-tuned data with artificial intelligence about tracking information is and the type of activities that are occurring, I think ultimately we’ll have better risk management practices within the institutions as well.”

Having been a guest on Jo Ann’s podcast myself (see Richards Podcast), I know how unforgiving the literal transcript of a podcast can be, so it is fair to write that the Comptroller’s point was that the current systems kick out a lot of false negatives that require a lot of manual investigations; and better data and artificial intelligence could reduce those false negatives, resulting in greater efficiencies and better risk management.

But it is curious that he refers to “false negatives” – which are transactions that do not alert but should have alerted – rather than “false positives” – which are transactions that did alert and, after being investigated, prove not to be suspicious and therefore falsely alerted. The Comptroller has many issues to deal with, and it’s easy to confuse false negatives with false positives. In fairness, his ultimate point was well made: the current regulatory requirements and expectations around AML monitoring, alerting, investigations, and reporting have resulted in a regime that is not efficient (he didn’t address the effectiveness of the SAR regime).

At the 21:30 mark, Jo Ann Barefoot commented on the recent FinTech Hackathon she hosted that looked at using new technology to make suspicious activity monitoring and reporting more efficient and effective, and stated that “we need to get rid of the false flags in the system” (I got the sense that she was uncomfortable with using the Comptroller’s phrase of “false negatives” – Jo Ann is well-versed in BSA and AML and familiar with the issue of high rates of false positives). Comptroller Otting replied:

“If you think just in the SARs space, that 7 percent of transactions kind of hit the tripwire, and then ultimately about 2 percent generally have SARs filed against them, that 5 percent is an enormous amount of resources that organizations are dedicating towards that compliance function that I’m convinced that with new technology we can improve that process.”

Again, podcast transcripts can be unforgiving, and I believe the point that the Comptroller was making was that a small percentage of transactions are alerted on by AML monitoring systems, and an even smaller percentage of those alerts are eventually reported in SARs. His percentages, and math, may not foot back to any verifiable data, but his point is sound: the current AML monitoring, alerting, investigations, and reporting system isn’t as efficient as it should be and could be (again, he didn’t address its effectiveness).

I don’t believe that the inefficiencies in the current AML system are wholly caused by outdated or poorly deployed technology. Rather, financial institutions are (rightfully) deathly afraid of a regulatory sanction for missing a potentially suspicious transaction, and will err on the side of alerting and filing on much more than is truly suspicious. For larger institutions, it will cost them a few million dollars more to run at a 95% false positive rate rather than an 85% rate, or 75% rate (I address the question of what is a good false positive rate in one of the articles, below), but those institutions know that by doing so, they avoid the hundreds of millions of dollars in potential fines for missing that one big case, or series of cases, that their regulator, with hindsight, determines should have been caught.
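As a rough illustration of why those false positive rates differ so much in workload, the sketch below computes how many alerts must be reviewed to produce one SAR at each rate. It assumes "false positive rate" means the share of alerts that do not result in a SAR, and that the number of SARs stays fixed; the function name `alerts_per_sar` is hypothetical.

```python
def alerts_per_sar(false_positive_pct: float) -> float:
    """Alerts reviewed per SAR filed, assuming the false positive rate is
    the percentage of alerts that do not result in a SAR."""
    return 100 / (100 - false_positive_pct)


# The three rates mentioned in the text.
for rate in (95, 85, 75):
    print(f"{rate}% false positives -> {alerts_per_sar(rate):.1f} alerts per SAR")
```

Under these assumptions, moving from a 95% rate to a 75% rate cuts the review workload per SAR from 20 alerts to 4.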

Running an AML monitoring and surveillance program that produces 95% false positives is not “helping us catch the bad guys that are taking advantage of the banking industry” as the Comptroller noted at the beginning of the podcast. Perhaps a renewed and coordinated, cooperative effort between technologists, bankers, BSA/AML professionals, law enforcement, and the Office of the Comptroller of the Currency can lead us to a monitoring/surveillance regime enhanced with more effective technologies and better feedback on what is providing tactical and strategic value to law enforcement … and, hopefully, tempered by a more forgiving regulatory approach.

Below are two articles I’ve written on monitoring, false positive rates, and the use of artificial intelligence, among other things. Let’s work together to get to a more effective and efficient AML regime.

Rules-Based Monitoring, Alert to SAR Ratios, and False Positive Rates – Are We Having The Right Conversations?

This article was published on December 20, 2018. It is available at RegTech Article – Are We Having the Right Conversations?

There is a lot of conversation in the industry about the inefficiencies of “traditional” rules-based monitoring systems, Alert-to-SAR ratios, and the problem of high false positive rates. Let me add to that conversation by throwing out what could be some controversial observations and suggestions …

Current Rules-Based Transaction Monitoring Systems – are they really that inefficient?

For the last few years AML experts have been stating that rules-based or typology-driven transaction monitoring strategies that have been deployed for the last 20 years are not effective, with high false positive rates (95% false positives!) and enormous staffing costs to review and disposition all of the alerts. Should these statements be challenged? Is it the fact that the transaction monitoring strategies are rules-based or typology-driven that drives inefficiencies, or is it the fear of missing something driving the tuning of those strategies? Put another way, if we tuned those strategies so that they only produced SARs that law enforcement was interested in, we wouldn’t have high false positive rates and high staffing costs. Graham Bailey, Global Head of Financial Crimes Analytics at Wells Fargo, believes it is a combination of basic rules-based strategies coupled with the fear of missing a case. He writes that some banks have created their staffing and cost problems by failing to tune their strategies, and by “throwing orders of magnitude higher resources at their alerting.” He notes that this has a “double negative impact” because “you then have so many bad alerts in some banks that they then run into investigators’ ‘repetition bias’, where an investigator has had so many bad alerts that they assume the next one is already bad” and they don’t file a SAR. So not only are the SAR/alert rates low, you also run the risk of missing the good cases.

After 20+ years in the AML/CTF field – designing, building, running, tuning, and revising programs in multiple global banks – I am convinced that rules-based interaction monitoring and customer surveillance systems, running against all of the data and information available to a financial institution, managed and tuned by innovative, creative, courageous financial crimes subject matter experts, can result in an effective, efficient, proactive program that both provides timely, actionable intelligence to law enforcement and meets and exceeds all regulatory obligations. Can cloud-based, cross-institutional, machine learning-based technologies assist in those efforts? Yes! If properly deployed and if running against all of the data and information available to a financial institution, managed and tuned by innovative, creative, courageous financial crimes subject matter experts.

Alert to SAR Ratios – is that a ratio that we should be focused on?

A recent Mid-Size Bank Coalition of America (MBCA) survey found the average MBCA bank had: 9,648,000 transactions/month being monitored, resulting in 3,908 alerts/month (0.04% of transactions alerted), resulting in 348 cases being opened (8.9% of alerts became a case), resulting in 108 SARs being filed (31% of cases or 2.8% of alerts). Note that the survey didn’t ask whether any of those SARs were of interest or useful to law enforcement. Some of the mega banks indicate that law enforcement shows interest in (through requests for supporting documentation or grand jury subpoenas) 6% – 8% of SARs.
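The funnel percentages quoted from the survey can be reproduced with a few lines of arithmetic. This is a minimal sketch using only the figures in the paragraph above; the variable names and the `pct` helper are illustrative, and the last entry (alerts without a SAR) is derived from those figures rather than reported by the survey.

```python
# Monthly averages per bank, as quoted from the MBCA survey in the text.
transactions = 9_648_000
alerts = 3_908
cases = 348
sars = 108


def pct(numerator: int, denominator: int) -> float:
    """Return numerator / denominator expressed as a percentage."""
    return 100.0 * numerator / denominator


funnel = {
    "alerts per transaction": pct(alerts, transactions),
    "cases per alert": pct(cases, alerts),
    "SARs per case": pct(sars, cases),
    "SARs per alert": pct(sars, alerts),
    # Derived here, not a survey figure: alerts that did not end in a SAR.
    "alerts without a SAR": pct(alerts - sars, alerts),
}

for name, value in funnel.items():
    print(f"{name}: {value:.2f}%")
```

On these numbers, roughly 97% of alerts do not end in a SAR, which is in line with the 95% to 98% false positive rates commonly cited.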

So I argue that the Alert/SAR and even Case/SAR (in the case of Wells, Package/Case and Package/SAR) ratios are all of interest, but tracking to SARs filed is a little bit like a car manufacturer tracking how many cars it builds but not how many cars it sells, or how well those cars perform, how well they last, and how popular they are. The better measure for AML programs is “SARs purchased”, or SARs that provide value to law enforcement.

How do you determine whether a SAR provides value to Law Enforcement? One way would be to ask Law Enforcement, and hope you get an answer. That could prove to be difficult. Can you somehow measure Law Enforcement interest in a SAR? Many banks do that by tracking grand jury subpoenas received to prior SAR suspects, Law Enforcement requests for supporting documentation, and other formal and informal requests for SARs and SAR-related information. As I write above, an Alert-to-SAR rate may not be a good measure of whether an alert is, in fact, “positive”. What may be relevant is an Alert-to-TSV SAR rate (see my previous article for more detail on TSV SARs). What is a “TSV SAR”? A SAR that has Tactical or Strategic Value to Law Enforcement, where the value is determined by Law Enforcement providing a response or feedback to the filing financial institution within five years of the filing of the SAR that the SAR provided tactical (it led to or supported a particular case) or strategic (it contributed to or confirmed a typology) value. If the filing financial institution does not receive a TSV SAR response or feedback from law enforcement or FinCEN within five years of filing a SAR, it can conclude that the SAR had no tactical or strategic value to law enforcement or FinCEN, and may factor that into decisions whether to change or maintain the underlying alerting methodology. Over time, the financial institution could eliminate those alerts that were not providing timely, actionable intelligence to law enforcement, and when that information is shared across the industry, others could also reduce their false positive rates.
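One way to make the TSV SAR idea concrete is a small sketch that classifies each SAR by whether feedback arrived within the five-year window, then computes an Alert-to-TSV SAR rate. Everything here is hypothetical: the `Sar` record, the `status` labels, and the sample dates are invented for illustration, and no such feedback data feed is assumed to exist today.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Sar:
    filed: date
    feedback: Optional[date] = None  # date law enforcement reported value, if ever


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years, mapping Feb 29 to Feb 28 when needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def status(sar: Sar, as_of: date, window_years: int = 5) -> str:
    """Return 'tsv', 'no_value', or 'pending' for a SAR as of a given date."""
    deadline = add_years(sar.filed, window_years)
    if sar.feedback is not None and sar.feedback <= deadline:
        return "tsv"
    # No timely feedback: conclusive only once the window has closed.
    return "no_value" if as_of > deadline else "pending"


# Invented sample: four SARs arising from 100 alerts.
sars = [
    Sar(date(2015, 1, 10), date(2017, 6, 1)),  # feedback inside the window
    Sar(date(2015, 3, 1)),                     # window closed, no feedback
    Sar(date(2014, 2, 1), date(2020, 2, 1)),   # feedback arrived too late
    Sar(date(2019, 5, 1)),                     # window still open
]
as_of = date(2021, 1, 1)
statuses = [status(s, as_of) for s in sars]
alert_to_tsv_rate = statuses.count("tsv") / 100
print(statuses, alert_to_tsv_rate)
```

Keeping a separate "pending" state avoids treating recent SARs as worthless before the five-year window has run.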

Which leads to …

False Positive Rates – if 95% is bad … what’s good?

There is a lot of lamenting, and a lot of axiomatic statements, about high false positive rates for AML alerts: 95% or even 98% false positive rates. I’d make three points.

First, vendors selling their latest products, touting machine learning and artificial intelligence as the solution to high false positive rates, are doing what they should be doing: convincing consumers that their current product is out-dated and ill-equipped for its purpose by touting the next, new product. I argue that high false positive rates are not caused by the current rules-based technologies; rather, they’re caused by inexperienced AML enthusiasts or overwhelmed AML experts applying rules that are too simple against data that is mis-labeled, incomplete, or simply wrong, and erring on the side of over-alerting and over-filing for fear of regulatory criticism and sanctions.

If the regulatory problems with AML transaction monitoring were truly technology problems, then the technology providers would be sanctioned by the regulators and prosecutors. But an AML technology provider has never been publicly sanctioned by regulators or prosecutors … for the simple reason that any issues with AML technology aren’t technology issues: they are operator issues.

Second, are these actually “false” alerts? Rather, they are alerts that, at the present time, based on the information currently available, either (i) do not rise to the level of requiring a complete investigation, or (ii) if completely investigated, do not meet the definition of “suspicious”. Regardless, they are now valuable data points that go back into your monitoring and case systems and are “hibernated” and possibly come back if that account or customer alerts at a later time, or there is another internally- or externally-generated reason to investigate that account or customer.

Third, if 95% or 98% false positive rates are bad … what is good? What should the target rate be? I’ll provide some guidance, taken from a Treasury Office of Inspector General (OIG) Report: OIG-17-055 issued September 18, 2017 titled “FinCEN’s information sharing programs are useful but need FinCEN’s attention.” The OIG looked at 314(a) statistics for three years (fiscal years 2010-2012) and found that there were 711 314(a) requests naming 8,500 subjects of interest sent out by FinCEN to 22,000 financial institutions. Those requests came from 43 Law Enforcement Agencies (LEAs), with 79% of them coming from just six LEAs (DEA, FBI, ICE, IRS-CI, USSS, and US Attorneys’ offices). Those 711 requests resulted in 50,000 “hits” against customer or transaction records by 2,400 financial institutions.

To analogize those 314(a) requests and responses to monitoring alerts, there were 2,400 “alerts” (financial institutions with positive matches) out of 22,000 “transactions” (total financial institutions receiving the 314(a) requests). That is an 11% hit rate or, arguably, an 89% false positive rate. And keep in mind that in order to be included in a 314(a) request, the Law Enforcement Agency must certify to FinCEN that the target “is engaged in, or is reasonably suspected based on credible evidence of engaging in, terrorist activity or money laundering.” So Law Enforcement considered that all 8,500 of the targets in the 711 requests were active terrorists or money launderers, and 11% of the financial institutions positively responded.
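The 314(a) analogy rests on simple arithmetic, sketched below using only the OIG figures quoted above. The variable names are illustrative, and treating institutions as "transactions" is the analogy made in the text, not an OIG calculation.

```python
# Figures quoted from OIG-17-055 (fiscal years 2010-2012).
requests = 711
subjects = 8_500
institutions_receiving = 22_000
institutions_with_hits = 2_400
total_hits = 50_000

hit_rate = institutions_with_hits / institutions_receiving
no_hit_rate = 1 - hit_rate

print(f"hit rate: {hit_rate:.1%}")
print(f"no-hit ('false positive') rate: {no_hit_rate:.1%}")
print(f"subjects per request: {subjects / requests:.1f}")
print(f"hits per responding institution: {total_hits / institutions_with_hits:.1f}")
```

The unrounded hit rate is just under 11%, which is where the 11% and 89% figures come from.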

With that, one could argue that a “hit rate” of 10% to 15% could be optimal for any reasonably designed, reasonably effective AML monitoring application.

But a better target rate for machine-generated alerts is the rate generated by humans. Bank employees – whether bank tellers, relationship managers, or back-office personnel – all have the regulatory obligation of reporting unusual activity or transactions to the internal bank team that is responsible for managing the AML program and filing SARs. For the twenty plus years I was a BSA Officer or head of investigations at large multi-national US financial institutions, I found that those human-generated referrals resulted in a SAR roughly 40% to 50% of the time.

An alert to SAR ratio goal of machine-based alert generation systems should be to get to the 40% to 50% referral-to-SAR ratio of human-based referral generation programs.

Flipping the Three AML Ratios with Machine Learning and Artificial Intelligence (why Bartenders and AML Analysts will survive the AI Apocalypse)

This article was posted on December 14, 2018. It remains the most viewed article on my website. It is available at RegTech Article – Flipping the Ratios

Machine Learning and Artificial Intelligence proponents are convinced – and spend a lot of time trying to convince others – that they will disrupt and revolutionize the current “broken” AML regime. Among other targets within this broken regime is AML alert generation and disposition and reducing the false positive rate (more on false positives in another article!). The result, if we believe the ML/AI community, is a massive reduction in the number of AML analysts that are churning through the hundreds and thousands of alerts, looking for the very few that are “true positives” worthy of being labelled “suspicious” and reported to the government.

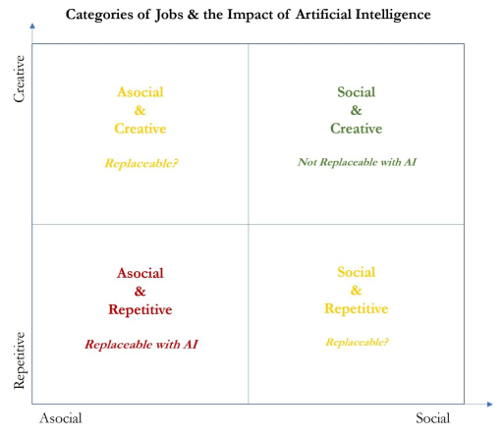

But is it that simple? Can the job of AML Analyst be eliminated or dramatically changed – in scope and number of positions – by machine learning and AI? Much has been and continues to be written about the impact of artificial intelligence on jobs. Those writers have categorized jobs along two axes – a Repetitive-to-Creative axis, and an Asocial-to-Social axis – resulting in four “buckets” of jobs, with each bucket of jobs being more or less likely to be disrupted or even eliminated:

A good example is the “Social & Repetitive” job of Bartender: Bartenders spend much of their time doing very routine, repetitive tasks: after taking a drink order, they assemble the correct ingredients in the correct amounts, and put those ingredients in the correct glass, then present the drink to the customer. All of that could be more efficiently and effectively done with an AI-driven machine, with no spillage, no waste, and perfectly poured drinks. So why haven’t we replaced bartenders? Because a good bartender has empathy, compassion, and instinct, and with experience can make sound judgments on what to pour a little differently, when to cut-off a customer, when to take more time or less with a customer. A good bartender adds value that a machine simply can’t.

Another example could be the “Asocial & Creative” (or is it “Social & Repetitive”?) job of an AML Analyst: much of an AML Analyst’s time is spent doing very routine, repetitive tasks: reviewing the alert, assembling the data and information needed to determine whether the activity is suspicious, writing the narrative. So why haven’t we replaced AML Analysts? Because a good Analyst, like a good bartender, has empathy, compassion, and instinct, and with experience can make sound judgments on what to investigate a little differently, when to cut-off an investigation, when to take more time or less on an investigation. A good Analyst adds value that a machine simply can’t.

Where AI and Machine Learning, and Robotic Process Automation, can really help is by flipping the three currently inefficient AML ratios:

- The False Positive Ratio – the currently accepted, but highly axiomatic and anecdotal, ratio is that 95% to 98% of alerts do not result in SARs, or are “false positives” … although no one has ever boldly stated what an effective or acceptable false positive rate is (even with ROC curves providing some empirical assistance), perhaps the ML/AI/RPA communities can flip this ratio so that 95% of alerts result in SARs. If they can do this, they can also convince the regulatory community that this new ratio meets regulatory expectations (because as I’ll explain in an upcoming article, the false positive ratio problem may be more of a regulatory problem than a technology problem).

- The Forgotten SAR Ratio – like false positive rates, there are anecdotes and some evidence that very few SARs provide tactical or strategic value to law enforcement. Recent Congressional testimony suggests that ~20% of SARs provide TSV (tactical or strategic value) to law enforcement … perhaps the ML/AI/RPA communities can help to flip this ratio so that 80% of SARs are TSV SARs. This also will take some effort from the regulatory and law enforcement communities.

- The Analysts’ Time Ratio – 90% of an AML Analyst’s time can be spent simply assembling the data, information, and documents needed to investigate a case, and only 10% of their time thinking and using their empathy, compassion, instinct, judgment, and experience to make good decisions and file TSV SARs … perhaps the ML/AI/RPA communities can help to flip this ratio so that Analysts spend 10% of their time assembling and 90% of their time thinking.

We’ve seen great strides in the AML world in the last 5-10 years when it comes to applying machine learning and creative analytics to the problems of AML monitoring, alerting, triaging, packaging, investigations, and reporting. My good friend and former colleague Graham Bailey at Wells Fargo designed and deployed ML and AI systems for AML as far back as 2008-2009, and the folks at Verafin have deployed cloud-based machine learning tools and techniques to over 1,600 banks and credit unions.

I’ve outlined three rather audacious goals for the machine learning/artificial intelligence/robotic process automation communities:

- The False Positive Ratio – flip it from 95% false positives to 5% false positives

- The Forgotten SAR Ratio – flip it from 20% TSV SARs to 80% TSV SARs

- The Analysts’ Time Ratio – flip it from 90% gathering data to 10% gathering data

Although many new AML-related jobs are being added – data scientist, model validator, etc. – and many existing AML-related jobs are changing, I am convinced that the job of AML Analyst will always be required. Hopefully, it will shift over time from being predominantly that of a gatherer of information to being more that of a hunter of criminals and terrorists. But it will always exist. If not, I can always fall back on being a Bartender. Maybe …