An 82nd Airborne soldier trains with a Black Hornet mini-drone before deploying to Afghanistan.

WASHINGTON: “AI is hard… and robots are dumb.” That was how one participant summed up a panel of artificial intelligence experts hosted by Army Futures Command. But AI is also essential to victory in future wars, the experts agree. The biggest stumbling block now may be the human urge to micromanage it.

How do you resolve this paradox? That’s what the Army and DARPA are trying to find out. The physical Robotic Combat Vehicles the Army is using in a series of field experiments are largely remote-controlled. Each RCV has a human gunner and a human driver; they’re just not sitting inside the vehicle. But in virtual wargames, the Army is putting real soldiers in charge of simulated robots that can operate much more autonomously – if the humans let them.

Qinetiq’s Robotic Combat Vehicle – Light (RCV-L)

A DARPA-Army program called SESU (System-of-Systems Enhanced Small Unit) is simulating a future company-sized unit – 200 to 300 soldiers – reinforced by hordes of highly autonomous drones and ground robots, explained one panelist. (Reporters were allowed to cover the Army Futures Command conference as long as we didn’t name individual participants.)

“The whole concept of the program is these swarms will become fire and forget,” the SESU expert said. “Given a mission they’ll be able to self-organize, recognize the environment, recognize threats, recognize targets, and deal with them.” AI can’t manage this in the messy real world yet, and current Pentagon policy wouldn’t allow it anyway, but AI can do it in the radically simplified environment of a simulation, which DARPA and the Army used to try out future tactics.

“[When] we gave the capabilities to the AI to control [virtual] swarms of robots and unmanned vehicles,” he said, “what we found, as we ran the simulations, was that the humans constantly want to interrupt them.”

An Air Force operator controls a Ghost Robotics “dog” during an ABMS “on-ramp” exercise at Nellis Air Force Base.

One impact of human micromanagement is obvious: It slows things down. That’s true for humans as well. A human soldier who has to ask his superiors for orders will react more slowly than one empowered to take the initiative. It’s an even bigger brake on an AI, whose electronic thought processes can cycle far faster than a human’s neurochemical brain. An AI that has to get human approval to shoot will be beaten to the draw by an AI that doesn’t.

If you have to transmit an image of the target, let the human look at it, and wait for the human to hit the “fire” button, “that is an eternity at machine speed,” he said. “If we slow the AI to human speed…we’re going to lose.”

The second problem is the network. It must work all the time. If your robot can’t do X without human permission, and it can’t get human permission because it and the human can’t communicate, your robot can’t do X at all.

A Predator ground control station. The drone requires two human crew to operate it by remote control, a pilot and a sensor/weapons specialist.

You also need a robust connection, because the computer can’t just text: “Win war? Reply Y/N.” It has to send the human enough data to make an informed decision, which, for the use of lethal weapons, means sending at least one clear picture of the target and, in many cases, video. But transmitting video takes the kind of high-bandwidth, long-range wireless connection that’s hard enough to keep stable when you’re on Zoom at home, let alone on the battlefield, where the enemy is jamming your signals, hacking your network, and bombing any transmitter they can trace.

The third problem is the most insidious. If humans are constantly telling the AI what to do, it’ll only do things humans can think of doing. But in simulated conflicts from chess to Go to StarCraft, AI consistently surprises human opponents with tactics no human ever imagined. Most of the time, the crazy tactics don’t actually work, but if you let a “reinforcement learning” AI do trial and error over thousands or millions of games – too many for a human to watch, let alone play – then it will eventually stumble onto brilliant moves.

(This is how an AI outsmarts a human, by the way: It’s a lot stupider, but it’s also a lot faster, so it can throw out a lot more stupid ideas and still produce some shockingly good ones.)
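To make that trial-and-error idea concrete, here is a toy sketch in Python. It is not how AlphaGo or the Army’s wargames actually work: it swaps in an “epsilon-greedy” multi-armed bandit, a far simpler relative of reinforcement learning, and the function name `run_trial_and_error` and all the win rates are hypothetical, invented for illustration. The program mostly replays whichever tactic has won most often so far, but 10 percent of the time it tries one at random, however dumb it looks.

```python
import random

def run_trial_and_error(win_rates, games=10_000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: usually exploit the best-looking tactic, sometimes explore."""
    rng = random.Random(seed)
    n = len(win_rates)
    plays = [0] * n  # how many games each tactic has been tried in
    wins = [0] * n   # how many of those games it won

    def observed_rate(i):
        # Untried tactics score 0.0, so only exploration can discover them.
        return wins[i] / plays[i] if plays[i] else 0.0

    for _ in range(games):
        if rng.random() < epsilon:
            choice = rng.randrange(n)  # explore: try any tactic, however odd
        else:
            choice = max(range(n), key=observed_rate)  # exploit the best so far
        plays[choice] += 1
        # Simulate one game: the tactic wins with its hidden probability.
        if rng.random() < win_rates[choice]:
            wins[choice] += 1
    return plays, wins

# Hypothetical setup: 50 "crazy" tactics that rarely win, one conventional
# tactic a human might choose, and one brilliant tactic no one suggested.
win_rates = [0.05] * 50 + [0.5, 0.9]
plays, wins = run_trial_and_error(win_rates)
best = max(range(len(plays)), key=lambda i: plays[i])
print(f"most-played tactic: #{best}, played {plays[best]} of {sum(plays)} games")
```

Nobody tells the program which tactic is the good one, yet it ends up playing the brilliant option far more than anything else. The price is thousands of games, many of them spent trying out bad ideas, which is cheap for a machine and impossible for a human.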

Go Master Ke Jie defeated by AlphaGo

“You probably don’t want to expect it to behave just like a human,” said an Army researcher whose team has run hundreds of thousands of virtual fights. “That’s probably one of the main takeaways from these simulated battles.”

“It’s very interesting,” agreed a senior Army scientist, “to watch how the AI discovers, on its own,… some very tricky and interesting tactics. [Often you say], ‘oh whoa, that’s pretty smart, how did it figure out that one?’”

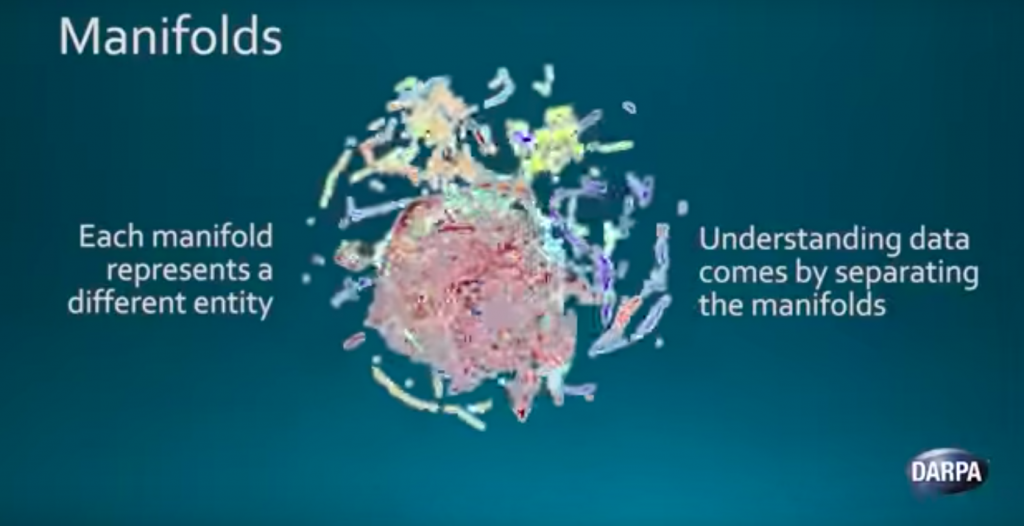

DARPA and the Intelligence Community are working hard on “explainable AI”: AI that not only crunches data, performs mysterious math, and outputs a conclusion, but actually explains why it came to that conclusion in terms a human can understand.

Unfortunately, machine learning operates by running complex calculations of statistical correlations across enormous datasets, and most people can’t begin to follow the math. Even the AI scientists who wrote the original equations can’t manually check every calculation a computer makes. If you require your AI to only use logic that humans can understand, it’s a bit like asking a police dog to track suspects by only following scents a human can smell, or asking Michelangelo to paint exclusively in black and white for the benefit of the colorblind.

“There’s been an over-emphasis on explainability,” one private-sector scientist said. “That would be a huge, huge limit on AI.”

Modern machine learning AI relies on the fact that, in any large set of data, there will emerge clusters of data points that correspond to things in the real world.
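For a toy illustration of clusters emerging from data, the short Python sketch below uses k-means, one of the oldest and simplest clustering algorithms. That choice is an assumption for illustration, not a claim about what any Army or DARPA system uses, and the eight data points and the `kmeans` helper are hypothetical. The algorithm is never told which points belong together; it simply alternates between assigning each point to its nearest cluster center and moving each center to the average of its points.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Minimal k-means: alternate nearest-center assignment and center updates."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k randomly chosen data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster whose center is nearest.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: move each center to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centers[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centers

# Hypothetical data: two groups of readings, with no labels attached.
points = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.6), (0.4, 0.4),
          (10.0, 10.0), (9.6, 10.3), (10.2, 9.7), (9.9, 10.4)]
labels, centers = kmeans(points, k=2)
print(labels)
```

The first four points land in one cluster and the last four in the other. Real machine learning systems work with far more data and far more dimensions, which is exactly why a human can’t check the math by hand.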

But it’s difficult to trust a new machine. One of the older panelists recounted how, after getting his first digital calculator in the 1970s, he double-checked everything on a slide rule for months. That was time-consuming, but at least it was physically possible. By contrast, there’s no way a human double-checker can keep up with the calculations an AI is constantly making.

So maybe you don’t try. We already rely every day on automated processes we don’t understand, although we certainly don’t let them decide when to take a human life.

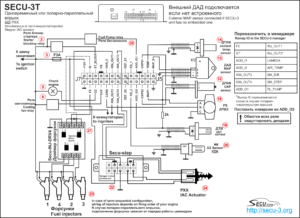

Diagram of an electronic fuel injection control system

“There are very sophisticated computations that are happening in my smart phone when it takes pictures,” the senior Army scientist said. “There are some very sophisticated computations in the engine of my car as it decides how much fuel to inject in each cylinder at each moment. I do not want to participate in those decisions. I should not participate in those decisions. I must not be allowed to participate in those decisions.”

“There is an unfortunate tendency for the humans to try to micromanage AI,” the scientist continued. “Everybody will have to get used to the fact that AI exists. It’s around us. It’s with us, and it thinks and acts differently than we do.”

“Decisionmakers need to understand,” agreed the expert on the SESU experiments, “that an AI, at some point, will have to be let go.”

A few years after that elder panelist came to terms with his new calculator, George Lucas made the original Star Wars. In its climactic scene, pilot Luke Skywalker hears the disembodied voice of his mentor in his head telling him to “let go,” trust his instincts, and use the Force. So Luke turns off his targeting computer and makes the million-to-one shot by eye. If the AI experts have it right, future warriors may have to turn off their instincts and trust the computer.
